
The future of AI hinges on data. Data is the raw material that fuels machine learning algorithms, enabling them to learn, adapt, and make intelligent decisions. Without vast amounts of high-quality data, AI learning algorithms are like engines without fuel — full of potential but unable to function.

However, gathering this data is fraught with challenges: privacy issues, inherent biases, and even simple scarcity. Synthetic data offers a powerful solution by providing artificial yet realistic data that can be used to train AI models without compromising privacy, while also working around scarcity.

This post explores:

- What synthetic data is

- Why synthetic data is important

- How synthetic data is generated

- What synthetic data can be used for

- The benefits of synthetic data

- Concerns surrounding synthetic data

- Synthetic data’s growing impact on AI development

What is synthetic data?

AI-powered synthetic data is data that’s been artificially created rather than collected from real-world events, phenomena, or people. It simulates the characteristics and statistical properties of genuine data, but it doesn't come from actual occurrences or real individuals.

Synthetic data created via AI serves as a powerful tool for overcoming common data challenges. It can be generated in vast quantities, tailored to specific scenarios, and kept free of personal information.

For these reasons, synthetic data is invaluable for training models, testing systems, and validating algorithms. In particular, it enables developers to build and refine AI models with rich datasets while sidestepping privacy concerns and data limitations.

Why is synthetic data important?

Synthetic data addresses key pain points in data collection and usage. It helps overcome the limitations of real data, such as scarcity, privacy and confidentiality concerns, and the cost and feasibility of collection. With special attention to how the synthetic data is generated and post-processed, it can also help reduce undesirable biases inherent in real data. Armed with synthetic data, you get a steady, abundant supply of training material without the complications of dealing with real-world data that is sensitive, incomplete, or that reinforces historical inequities.

Consider this: You’re developing an AI model to handle HR support tasks in a multinational company. The AI needs to manage complex queries about employee benefits, process various leave requests, and handle sensitive personal information that has been recorded all over the world, in various languages. Real data might be limited due to multiple complex privacy and data storage regulations that span different jurisdictions, potentially unbalanced due to uneven representation of employee scenarios, or just plain difficult to manage due to its sensitive nature.

With synthetic data, you can create a detailed dataset that mirrors the real HR interactions the AI will face. For example, you might generate a diverse set of simulated employee benefit inquiries, including questions about health insurance, retirement plans, and parental leave, as well as various types of leave requests, such as sick leave, personal time off, and family leave, complete with nuanced details and varying urgency. If the real data is unbalanced or contains too few examples of the rare cases in the tail of the distribution, you can oversample those important but underrepresented scenarios, so that your AI model responds competently and confidently when it encounters them in production.
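To make the oversampling idea concrete, here is a minimal Python sketch. It assumes the real data is available as a list of scenario labels, and it tops up each scenario with template-generated examples until a target count is reached. The scenario names, templates, slot values, and the `rebalance` helper are all hypothetical; a production pipeline would more likely prompt an LLM for varied, realistic requests than fill fixed templates.

```python
import random
from collections import Counter

# Hypothetical scenario templates, one list per HR scenario.
TEMPLATES = {
    "benefits_inquiry": [
        "Does my {plan} cover {item}?",
        "How do I change my {plan} election before {when}?",
    ],
    "sick_leave": ["I need {days} days of sick leave starting {when}."],
    "parental_leave": ["My child is due {when}; how much parental leave can I take?"],
    "bereavement_leave": ["A relative passed away; can I take {days} days off starting {when}?"],
}

# Hypothetical slot values used to fill the templates.
SLOTS = {
    "plan": ["health plan", "dental plan", "retirement plan"],
    "item": ["physiotherapy", "orthodontics", "employer matching"],
    "days": ["2", "5", "10"],
    "when": ["tomorrow", "next month", "in March"],
}


def fill(template, rng):
    """Fill every placeholder with a random slot value (unused slots are ignored)."""
    return template.format(**{name: rng.choice(values) for name, values in SLOTS.items()})


def rebalance(real_labels, min_per_scenario, seed=0):
    """Return synthetic (scenario, text) pairs that top each scenario up to the target."""
    rng = random.Random(seed)
    counts = Counter(real_labels)
    synthetic = []
    for scenario, templates in TEMPLATES.items():
        shortfall = max(0, min_per_scenario - counts[scenario])
        for _ in range(shortfall):
            synthetic.append((scenario, fill(rng.choice(templates), rng)))
    return synthetic
```

For example, with 40 benefits inquiries, 15 sick-leave requests, and 3 parental-leave requests in the real data and a target of 20 per scenario, `rebalance` adds 5 sick-leave, 17 parental-leave, and 20 bereavement-leave examples, and no benefits inquiries.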

In this example, synthetic data provides a robust and ethical solution for training AI systems. It helps ensure you have the diverse, abundant, and less biased data needed to build effective and fair AI models, enhancing their performance in real-world HR support tasks.

Synthetic data vs. real data

Synthetic data and real data serve different purposes but complement each other. Real data comes from actual events or interactions, offering authenticity and firsthand insight. However, real data can be limited, biased, or sensitive, which makes it challenging to use widely. In some cases, obtaining real data within a reasonable timeframe may not be feasible at all, as with rare events (e.g., a comet passing, or unusual climate conditions that meteorologists want to capture).

Synthetic data, on the other hand, is generated to replicate the properties of real data while avoiding these issues. It allows for the creation of large, diverse datasets without privacy concerns and while mitigating biases. Though it may not capture every nuance of real-world scenarios, it provides a practical solution for training and testing AI models effectively.

Different types of synthetic data and how they compare

Synthetic data comes in various forms, each serving different purposes and offering distinct benefits. Here’s a look at the main types and how they compare:

Generative data

Created using algorithms like generative adversarial networks (GANs), variational autoencoders (VAEs), transformers, or Gaussian copulas, this type of synthetic data mimics the statistical properties of real data.

A large sample of real data is used to teach a model how the data points relate to each other. Then, the model generates new, synthetic data that mirrors the original patterns. This synthetic data looks similar to the real data but is new and diverse. It’s helpful for creating large datasets, though it might not capture every subtle detail of the real world.
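As a small illustration of the generative approach, the sketch below implements a basic Gaussian copula with NumPy and the standard library's `statistics.NormalDist`. It learns how the columns of a numeric table co-vary (via the correlations of their normal scores) and then samples new rows through each column's empirical quantiles. The function names are our own, and this is a simplified sketch, not how any particular synthetic data library implements copulas.

```python
from statistics import NormalDist

import numpy as np

_STD_NORMAL = NormalDist()  # standard normal: mean 0, sigma 1
_cdf = np.vectorize(_STD_NORMAL.cdf)
_inv_cdf = np.vectorize(_STD_NORMAL.inv_cdf)


def fit_gaussian_copula(real):
    """Learn the dependence structure: correlations of each column's normal scores."""
    n = real.shape[0]
    # Rank-transform each column into (0, 1), then map ranks to standard-normal scores.
    ranks = real.argsort(axis=0).argsort(axis=0)
    z = _inv_cdf((ranks + 1) / (n + 1))
    return np.corrcoef(z, rowvar=False)


def sample_gaussian_copula(real, corr, n_samples, seed=0):
    """Draw correlated normal scores, then map them back to each column's scale."""
    rng = np.random.default_rng(seed)
    d = real.shape[1]
    z = rng.multivariate_normal(np.zeros(d), corr, size=n_samples)
    u = _cdf(z)  # uniforms in [0, 1] that keep the learned correlations
    # Invert each column's empirical distribution via its quantiles.
    return np.column_stack([np.quantile(real[:, j], u[:, j]) for j in range(d)])
```

Because samples are mapped back through empirical quantiles, the synthetic values never fall outside the observed range of each column. That is one concrete way such a model "might not capture every subtle detail": it cannot produce values more extreme than those it has seen.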

Self-play

Yes, machines now win at the ancient Chinese board game of Go, even against such celebrated professional dan players as Ke Jie, Lee Changho, and Lee Sedol. And the amazing thing is that AlphaGo Zero, the latest evolution in Go-playing neural networks, did not use any human data at all while learning the game. AlphaGo Zero started from scratch, i.e., from completely random play, and acted as… its own teacher! Beginning at a stage where it knew absolutely nothing about Go, the neural network played against itself, and in the process it was tuned and updated to predict moves, as well as the eventual winner of the games. Google’s DeepMind, the creator of AlphaGo, stated that AlphaGo was “able to learn tabula rasa from the strongest player in the world: AlphaGo itself”. After 40 days of self-training, AlphaGo Zero outperformed the previously strongest version of AlphaGo, called “Master”, which in turn had defeated the world’s number one human player. AlphaGo Zero also exhibited emergent creativity: it developed unconventional moves and novel techniques for playing the ancient game.

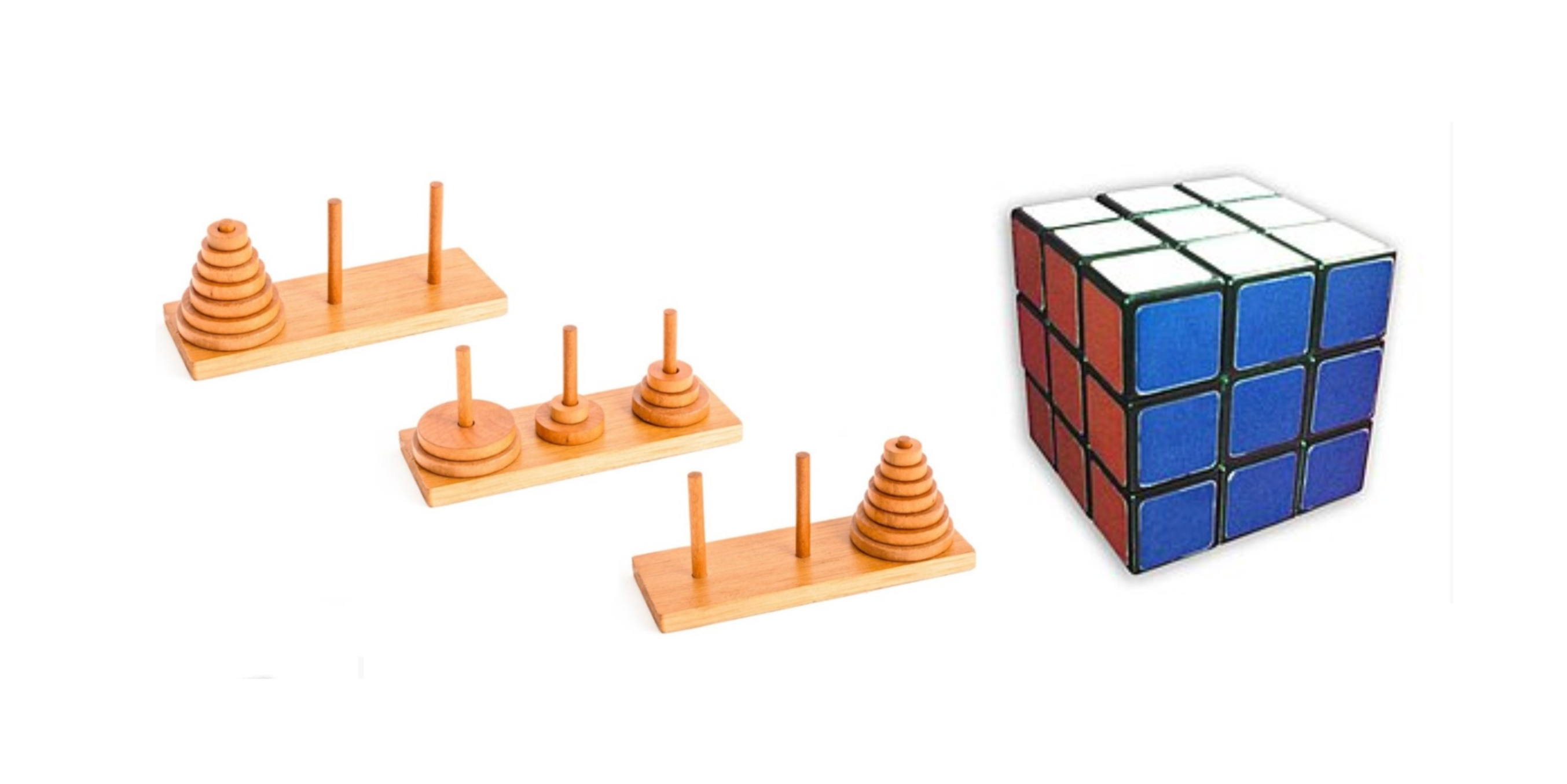

Self-play has also been used in other games, such as chess, and in computer programming. For example, Microsoft Research used self-play to generate and verify solutions to synthetic programming puzzles. Much like Rubik’s Cube or Tower of Hanoi puzzles, these are hard to solve, but once a solution is found, it is immediately obvious to a human that it is correct, as illustrated in the accompanying image.

The pipeline for synthetically generating these programming puzzles was as follows: first, a language model was trained on a set of human-written puzzles; next, it generated its own synthetic puzzles; then, it made 100 attempts to solve those synthetic puzzles; a Python interpreter then filtered the candidate solutions for correctness; finally, the language model improved itself by further training on these verified solutions, and the next iteration of the process began. To confirm that this pipeline indeed led to significant improvements, the researchers evaluated it on a carefully guarded, unpublished set of held-out test puzzles written by champion humans, i.e., competitive programmers.
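The filtering step in that pipeline is what makes the synthetic data trustworthy: a candidate solution is kept only if running it proves it correct. The sketch below shows the attempt-and-verify loop in miniature. The puzzles follow a "find `x` such that `sat(x)` returns `True`" style, and a random guesser stands in for the language model; the puzzles, `propose_solutions`, and the other helpers are hypothetical, and neither puzzle generation nor the fine-tuning step on the verified pairs is shown.

```python
import random

# Hypothetical puzzles: find x such that sat(x) returns True.
# Hard to solve by guessing, but trivial to check by running the code.
PUZZLES = {
    "square_is_196": lambda x: x * x == 196,
    "multiple_of_7_and_11": lambda x: x > 0 and x % 77 == 0,
    "digit_sum_is_20": lambda x: x >= 0 and sum(int(d) for d in str(x)) == 20,
}


def propose_solutions(rng, attempts):
    """Hypothetical stand-in for the language model: random integer guesses."""
    return [rng.randint(0, 1000) for _ in range(attempts)]


def verify(sat, candidate):
    """Interpreter filter: keep a candidate only if sat(candidate) runs and returns True."""
    try:
        return sat(candidate) is True
    except Exception:
        return False


def self_play_round(puzzles, attempts=100, seed=0):
    """One round: attempt every puzzle, keep only verified (puzzle, solution) pairs."""
    rng = random.Random(seed)
    verified = []
    for name, sat in puzzles.items():
        for candidate in propose_solutions(rng, attempts):
            if verify(sat, candidate):
                verified.append((name, candidate))
    # In the real pipeline, the model would next be fine-tuned on `verified`.
    return verified
```

Note that `verify` also rejects candidates that crash the puzzle (for example, a string passed to a numeric check), which mirrors how an interpreter-based filter discards malformed solutions.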

Self-play is useful in game play and coding, but can it also help with textual tasks? This question remains an open challenge. In competitive games, also called zero-sum games (a game-theoretic concept), one player’s win is necessarily the other’s loss. Human textual tasks, however, usually involve some degree of cooperation, and in such settings self-play no longer shines. Google DeepMind researchers hypothesize in the 2021 paper Collaborating with Humans without Human Data that this is possibly because, in self-play, the agent only ever needs to learn to coordinate effectively with itself, which makes it a poor partner in cooperative play. However, in the recent paper Efficacy of Language Model Self-Play in Non-Zero-Sum Games, Berkeley researchers empirically demonstrate that self-play can be effective in semi-competitive and fully collaborative game settings, too.

One of their hypotheses to explain this unexpected experimental finding is quite fascinating: “self-play with pretrained language models might actually function more similarly to population play, since large language models are trained on text from a population of users and may simulate different personas in different contexts”.

Further, part of the challenge in many textual tasks is the lack of an objective feedback loop about success. With puzzles, and with Go, chess, backgammon, checkers, and other games of skill, an agent playing against itself can tell whether what it did worked, but textual tasks lack that kind of automatic verifiability or objective feedback signal.

One approach to overcoming this impediment is to have another model provide that signal: the "reward" model in reinforcement learning from human feedback (RLHF), whose output is itself synthetic data! Another novel method, called SPIN (Self-Play fIne-tuNing), aims to convert a weak LLM into a strong LLM by unleashing the full power of the human-annotated data collected at the supervised fine-tuning (SFT) stage. There is only one model in this method, and it plays against the previous iteration of itself. It does not need additional human-annotated data, and it does not require feedback from a stronger model.

The UCLA researchers who introduced this method tested it experimentally and showed that it was able to outperform models trained with additional human or AI feedback. The game the model played consisted of distinguishing human-generated from synthetic data without any direct supervision. The synthetic data, in turn, was generated by an earlier version of the same model, which aimed to make it resemble the human-annotated SFT dataset so closely that telling the two apart would be as difficult as possible.
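As we understand the SPIN paper, its training objective resembles a logistic loss on how much more the new model prefers the human-written response over the response generated by the previous iteration, with each preference measured as a log-probability ratio against that previous iteration. The Python function below computes that per-example loss from precomputed log-probabilities. The argument names and the default scale `lam` are our assumptions, and this is a sketch of the loss only, not of the full training loop.

```python
import math


def spin_loss(logp_real, logp_real_prev, logp_synth, logp_synth_prev, lam=0.1):
    """Per-example SPIN-style loss (sketch).

    logp_real / logp_real_prev: log-probability of the human-written SFT response
    under the model being trained and under the previous iteration.
    logp_synth / logp_synth_prev: the same for the response that the previous
    iteration generated for the same prompt.
    """
    # How much more the new model favors the human response over the synthetic one,
    # each measured relative to the previous iteration.
    margin = lam * (logp_real - logp_real_prev) - lam * (logp_synth - logp_synth_prev)
    # Logistic loss log(1 + exp(-margin)), rearranged to avoid overflow.
    return max(0.0, -margin) + math.log1p(math.exp(-abs(margin)))
```

When the new model has not moved away from its predecessor, the loss equals log 2. It drops below log 2 when the new model shifts probability toward the human response and away from its predecessor’s output, and rises above log 2 in the opposite case.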

Rule-based data

This data is generated based on predefined rules, constraints, and parameters established by humans. For example, you can devise a rule for generating mock transactions at random, but within a range close enough to the actual data range and subject to certain restrictions, such as requiring that the generated transaction locations exist in the real world (so neither San Francisco, WA, nor Seattle, CA). Or you can generate a synthetic dataset of hourly observations of some phenomenon by interpolating between authentic daily observations, creating a smoother time series. This method is typically used for specific scenarios where the exact conditions, such as the underlying distribution, are well understood. While it’s less flexible than generative data, it ensures consistency and relevance.
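Both rule-based examples from the paragraph above can be sketched in a few lines of Python. The whitelist of valid (city, state) pairs, the amount range, and the function names are hypothetical, and linear interpolation via `numpy.interp` is just one simple choice for turning daily readings into an hourly series.

```python
import random

import numpy as np

# Hypothetical whitelist of real (city, state) pairs: combinations such as
# ("San Francisco", "WA") can never be generated.
VALID_LOCATIONS = [("San Francisco", "CA"), ("Seattle", "WA"), ("Austin", "TX")]


def mock_transactions(n, low, high, seed=0):
    """Random transactions with amounts drawn from [low, high] and real locations.

    Amounts are rounded to cents, so low and high are assumed to be whole-cent values.
    """
    rng = random.Random(seed)
    rows = []
    for i in range(n):
        city, state = rng.choice(VALID_LOCATIONS)
        rows.append({"id": i, "amount": round(rng.uniform(low, high), 2),
                     "city": city, "state": state})
    return rows


def daily_to_hourly(daily_values):
    """Linearly interpolate daily observations onto an hourly grid."""
    day_hours = np.arange(len(daily_values)) * 24  # hour index of each daily reading
    hours = np.arange(day_hours[-1] + 1)
    return np.interp(hours, day_hours, daily_values)
```

For instance, daily readings of 10, 20, and 16 become a 49-point hourly series that passes through 15 at hour 12 and 18 at hour 36.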

Rule-based synthetic data is often used when real data is not clean enough: for instance, authentic data may have missing values, some errors or inconsistencies, and removing or correcting them creates a partially synthetic dataset that meets the organization’s requirements for data quality and integrity.
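A minimal sketch of such rule-based repair follows. It assumes each record is a dictionary, replaces missing, NaN, or out-of-range values with a fallback (fixed numbers here; in practice perhaps a column median), and flags every replaced field so that the partially synthetic nature of the record stays auditable. The field names, ranges, and fallback values are hypothetical.

```python
import math


def clean_record(record, valid_ranges, fallback):
    """Replace missing or out-of-range fields with rule-based values, flagging each one."""
    fixed = dict(record)
    for field, (low, high) in valid_ranges.items():
        value = fixed.get(field)
        missing = value is None or (isinstance(value, float) and math.isnan(value))
        if missing or not low <= value <= high:
            fixed[field] = fallback[field]
            fixed[field + "_synthetic"] = True  # mark the value as generated, not observed
    return fixed


# Hypothetical rules for an HR table.
RANGES = {"age": (18, 80), "tenure_years": (0, 50)}
FALLBACK = {"age": 41, "tenure_years": 4}
```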

Simulated data

Simulated data is generated through simulation models that replicate real-world processes or environments. This type is valuable for scenarios requiring controlled conditions and can be tailored to simulate various outcomes. You can simulate the spread of infectious diseases in a population, the movement of vehicles in a city, the interactions of individual players in a multiplayer game, or the behavior of traders, market makers, research analysts, and investors in a market.
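For instance, the spread of an infectious disease can be simulated with a stochastic SIR (susceptible, infected, recovered) model. The sketch below is a simple discrete-time variant under assumed parameters: `beta` is the transmission rate and `gamma` is the daily recovery probability. Rerunning it with different parameters or seeds yields different synthetic outbreak trajectories.

```python
import numpy as np


def simulate_sir(population, initial_infected, beta, gamma, days, seed=0):
    """Stochastic discrete-time SIR model; returns an array of daily (S, I, R) counts."""
    rng = np.random.default_rng(seed)
    s, i, r = population - initial_infected, initial_infected, 0
    history = [(s, i, r)]
    for _ in range(days):
        # Each susceptible person is infected with probability 1 - exp(-beta * I / N).
        p_infect = 1.0 - np.exp(-beta * i / population)
        new_infections = rng.binomial(s, p_infect)
        # Each currently infected person recovers with probability gamma per day.
        new_recoveries = rng.binomial(i, gamma)
        s, i, r = s - new_infections, i + new_infections - new_recoveries, r + new_recoveries
        history.append((s, i, r))
    return np.array(history)
```

Because every transition moves people between compartments, each simulated day still sums to the full population, which is a cheap sanity check on the generated data.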

One use case for simulated synthetic data is arriving at a successful proof of concept before engaging in large-scale, expensive collection of real-world data. With simulated synthetic data, it is possible to test hypotheses and then make well-informed decisions about the type of data that will need to be collected in the wild and the data collection methodologies to use.

Hybrid data

Hybrid data combines elements of generative and rule-based approaches. This type balances statistical modeling with deterministic control, allowing for the flexible creation of diverse datasets that merge specific attributes or constraints with realistic representations.
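One way to read "hybrid" is sketched below: a statistical model (here simply a multivariate normal fitted to the real data) proposes rows, and deterministic rules reject any that violate known constraints. The rejection loop, the example rules, and `hybrid_sample` are illustrative assumptions rather than a standard method.

```python
import numpy as np


def hybrid_sample(real, n_samples, rules, seed=0, max_rounds=100):
    """A statistical model proposes rows; deterministic rules accept or reject them."""
    rng = np.random.default_rng(seed)
    # Generative part: fit a multivariate normal to the real rows.
    mean, cov = real.mean(axis=0), np.cov(real, rowvar=False)
    accepted = []
    for _ in range(max_rounds):
        proposals = rng.multivariate_normal(mean, cov, size=n_samples)
        # Rule-based part: keep only rows that satisfy every constraint.
        accepted.extend(row for row in proposals if all(rule(row) for rule in rules))
        if len(accepted) >= n_samples:
            break
    return np.array(accepted[:n_samples])


# Hypothetical constraints on (age, salary) rows.
RULES = [
    lambda row: 18 <= row[0] <= 70,  # working-age employees only
    lambda row: row[1] >= 30_000,    # assumed minimum salary
]
```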

How good is the quality of synthetic data?

The quality of synthetic data depends on its generation process. When created with advanced techniques like GANs or VAEs, synthetic data can closely mimic real data, maintaining high accuracy and relevance.

Metrics are used to determine whether synthetically generated data is aligned closely enough with real data. Such metrics evaluate distributional similarity between the authentic and the synthetic data by comparing summary statistics and correlations between variables within datasets, or they look at how hard it is to distinguish the synthetic from the authentic data points.
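The sketch below computes both kinds of metrics for numeric tables: gaps in means, standard deviations, and correlations, plus a distinguishability score. For the latter, it uses leave-one-out 1-nearest-neighbor classification of real versus synthetic rows on standardized features; accuracy near 0.5 suggests the two are hard to tell apart, while accuracy near 1.0 means the synthetic data is easy to spot. The 1-NN discriminator is our simplification; classifier-based tests often use stronger models.

```python
import numpy as np


def fidelity_report(real, synth):
    """Compare two numeric tables (rows = records) on statistics and distinguishability."""
    mean_gap = np.abs(real.mean(axis=0) - synth.mean(axis=0))
    std_gap = np.abs(real.std(axis=0) - synth.std(axis=0))
    corr_gap = np.abs(np.corrcoef(real, rowvar=False) - np.corrcoef(synth, rowvar=False)).max()

    # Distinguishability: leave-one-out 1-nearest-neighbor accuracy at labeling
    # rows as real (0) or synthetic (1), on features scaled by the real data's std.
    pooled = np.vstack([real, synth]) / real.std(axis=0)
    labels = np.concatenate([np.zeros(len(real)), np.ones(len(synth))])
    dists = np.linalg.norm(pooled[:, None, :] - pooled[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a row may not be its own neighbor
    nn_accuracy = float((labels[dists.argmin(axis=1)] == labels).mean())

    return {"mean_gap": mean_gap, "std_gap": std_gap,
            "max_corr_gap": float(corr_gap), "nn_accuracy": nn_accuracy}
```

The pairwise distance matrix grows quadratically with the number of rows, so this sketch suits samples of a few thousand rows at most.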

Synthetic data’s effectiveness relies on the quality of the algorithms and validation processes used. Well-generated synthetic data can be reliable and useful for training and testing AI models, but it’s important to continuously validate and refine it to ensure it meets the required standards.

What is synthetic data used for?

Synthetic data has a range of practical applications, especially in fields where real data is scarce or problematic. Here’s how it’s used:

AI training

Synthetic data is widely used to train AI models, offering diverse and controlled datasets that enhance model performance while minimizing privacy risks, reducing undesirable biases, and, in some cases where it is socially desirable, injecting appropriate biases to reduce inequities.

For an example of a controlled synthetic dataset, consider one that mimics real-world survey responses on a sensitive topic. Not only does it avoid infringing on the privacy of survey respondents, it also has all of its data fields filled, whereas a real dataset typically has missing fields: respondents, being human, are prone to fatigue and inattention that lead them to skip some questions.

For an example of a synthetic dataset that introduces a socially desirable bias, consider a dataset used to train AI to detect skin cancer. On darker skin, there may be less of a color contrast between certain malignancies and healthy skin, and cancerous lesions can be harder to spot. To help the algorithm get better, it may be desirable to oversample images with a darker skin tone and mimic their characteristics in a privacy-preserving synthetically created dataset that is then used to train the model to assist dermatologists in detecting skin cancer.

A separate problem has historically contributed to late cancer diagnosis, and therefore poor prognosis, for patients with dark skin: the educational resources used to train future dermatologists overwhelmingly featured pictures of very light skin. To compensate for this inequity, it is appropriate to deliberately oversample from the historically invisible and marginalized group of dark-skinned patients for a beneficial effect, an explicitly equitable injected bias. The co-authors of the 2021 article “Not all biases are bad: equitable and inequitable biases in machine learning and radiology” call this approach creating “biases on purpose.”

Supervised fine-tuning

With a foundation model pre-trained on a large corpus of public domain data, it can be difficult to get adequate performance on narrow domain data that is absent from the public domain, such as enterprise-internal employee support tickets or highly sensitive electronic medical records (EMR) stored inside healthcare organizations. This is because the training of the foundation model on the public domain did not expose it to enough examples of the narrow domain data.

The solution is often supervised fine-tuning (SFT), which teaches the model the specific language patterns and statistical interrelationships within the narrow domain by providing a dataset of annotated examples. However, human annotation can be impractical or too costly in terms of time and money. In some cases, synthetically created labeled examples come to the rescue, and a hybrid approach, in which the process is seeded with small-scale manual annotation and then scaled up synthetically, can work well.
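Here is a deliberately simple sketch of the seed-and-scale idea: a couple of human-written prompt/response templates are expanded across hypothetical roles, regions, and policy values, and the results are serialized as JSON Lines records. All names and values are made up for illustration; in practice, the scaling step would more likely use an LLM to paraphrase and diversify the seeds, followed by human spot checks.

```python
import itertools
import json

# Hypothetical human-written seed annotations: (prompt template, response template).
SEED_EXAMPLES = [
    ("How many vacation days do {role}s in {region} get?",
     "In {region}, {role}s receive {days} days of paid vacation per year."),
    ("Can a {role} in {region} carry over unused vacation days?",
     "Yes. A {role} in {region} may carry over up to {carry} unused days."),
]

# Hypothetical slot values and policy facts used to scale the seeds up.
ROLES = ["engineer", "manager"]
POLICY = {"Region A": {"days": 25, "carry": 5}, "Region B": {"days": 20, "carry": 10}}


def scale_up(seed_examples):
    """Expand every seed template across all roles and regions."""
    records = []
    for (prompt, response), role, region in itertools.product(seed_examples, ROLES, POLICY):
        values = {"role": role, "region": region, **POLICY[region]}
        records.append({"prompt": prompt.format(**values),
                        "response": response.format(**values)})
    return records


def to_jsonl(records):
    """Serialize records as JSON Lines, a common format for SFT datasets."""
    return "\n".join(json.dumps(r) for r in records)
```

Two seeds, two roles, and two regions yield eight distinct prompt/response pairs.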

Model testing

Before deploying AI systems, synthetic data can be used to test and validate models in a controlled environment. This helps confirm that they perform well under different scenarios without the risks associated with real data. Synthetic data offers an alternative to the traditional method of replicating real data from the production environment to the staging environment. The traditional method poses security risks (what if production data ends up somewhere else?) and data integrity risks (what if production data becomes corrupted in the process of its replication?). The synthetic method mitigates both.

Enhancing privacy and enabling data sharing

By using synthetic data, organizations can avoid exposing sensitive information. It’s a way to develop and test systems while protecting user privacy. And privacy-preserving data is conducive to wider and faster sharing, a benefit especially palpable in life sciences research and treatment development, where the ultimate goal is to enhance human health.

Filling data gaps and augmenting data in helpful ways

When real data is missing or insufficient, AI-generated synthetic data can be used to fill the gaps, preserving the original data’s characteristics more accurately than many traditional methods, which often distort the data’s true distribution. Synthetic data can also help focus on important minority populations within datasets.

One common use case here is fraud detection in financial services: the overwhelming majority of financial transactions are made in good faith, with the tiny percentage of fraudulent transactions representing an anomaly of sorts, but an effective fraud detection algorithm needs a plethora of diverse examples of such anomalies to learn to detect and react to fraud in a production environment. Synthesizing data enhances the training corpus with varied anomalous examples, leading to more performant models.
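One classic way to synthesize extra minority-class examples for tabular data is SMOTE (Synthetic Minority Over-sampling Technique), which creates new points along line segments between a minority example and one of its nearest minority-class neighbors. The NumPy sketch below is a simplified version that assumes the fraudulent transactions have already been encoded as numeric feature vectors; it does not describe any particular fraud detection system.

```python
import numpy as np


def smote(minority, n_new, k=5, seed=0):
    """SMOTE-style oversampling: interpolate between minority points and their neighbors.

    Assumes k < len(minority).
    """
    rng = np.random.default_rng(seed)
    dists = np.linalg.norm(minority[:, None, :] - minority[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a point is not its own neighbor
    neighbors = np.argsort(dists, axis=1)[:, :k]  # k nearest minority neighbors per point
    base = rng.integers(0, len(minority), size=n_new)
    partner = neighbors[base, rng.integers(0, k, size=n_new)]
    gap = rng.random((n_new, 1))  # position along the segment, in [0, 1)
    return minority[base] + gap * (minority[partner] - minority[base])
```

Because every new point lies between two real fraud examples, the synthetic anomalies stay inside the region the real anomalies occupy; that keeps them plausible but also means SMOTE cannot invent genuinely new fraud patterns.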

The data augmentation use case for synthetic data is one we have covered in our AI Explained video byte before. In short, models trained on small datasets tend towards overfitting, which leads to poor performance in production on unseen data. In essence, such models learn to pay too much attention to specific details in the small training dataset, erroneously treating those specific, often spurious, details as fundamental to understanding the data. Synthetically augmenting the small dataset introduces variation into the inputs, forcing the model to learn broad and truly fundamental patterns and to disregard changeable details. That increases the model’s predictive power.
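For text, a simple augmentation sketch in the spirit of the "easy data augmentation" (EDA) family of techniques is shown below: each variant randomly deletes a few words and swaps one pair, so the model sees the same request phrased slightly differently. The deletion probability and the `augment` helper are assumptions; such perturbations can change meaning, so real pipelines typically filter or review augmented examples.

```python
import random


def augment(sentence, n_variants=3, p_delete=0.1, seed=0):
    """Create noisy variants via random word deletion plus one random word swap."""
    rng = random.Random(seed)
    words = sentence.split()
    variants = []
    for _ in range(n_variants):
        # Random deletion; fall back to a single word if everything was dropped.
        kept = [w for w in words if rng.random() > p_delete] or [rng.choice(words)]
        # Random swap of two positions, when there are at least two words.
        if len(kept) > 1:
            i, j = rng.sample(range(len(kept)), 2)
            kept[i], kept[j] = kept[j], kept[i]
        variants.append(" ".join(kept))
    return variants
```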

Simulating scenarios

Synthetic data enables the simulation of various scenarios and outcomes, which is crucial in situations where real-life experimentation is too dangerous, unethical, or resource-intensive. For example, it can be used to test self-driving cars in hazardous situations or model medical interventions without risking patient safety.

Agentic AI and synthetic data

Thinking back to the Human Resources examples introduced at the beginning of this blog post, imagine an HR AI agent that automates and streamlines traditionally time-consuming and repetitive manual HR processes.

Rather than simply answering employees’ questions about benefits and leave, it provides an end-to-end solution for benefits and leave management, planning and executing complex functions in a chained sequence. Using synthetic data, as described above, to create an augmented and robust training dataset enables the AI agent to respond to corner cases as skillfully as to garden-variety ones, and to do so in any language spoken by the human user who needs help.

Advantages of synthetic data

Synthetic data offers several key advantages in the realm of AI and machine learning. First and foremost, it provides an essentially limitless supply of tailored data, purpose-built to meet specific requirements. Researchers and developers can generate data to precise specifications, without the limitations often encountered with real-world datasets.

One significant benefit of synthetic data is its ability to train AI models faster and more efficiently. This is particularly evident in addressing the challenge of data drift, where machine learning model performance deteriorates over time as new data deviates from the historical information in training corpora. Regularly retraining models on the full scope of actual data to keep pace with temporal changes can be prohibitively time-consuming and computationally expensive.

Synthetic data offers a solution by helping machine learning models retain their predictive power in the face of data drift. It allows for quick simulation of different scenarios for how future data might drift away from past data, enabling inexpensive model retraining on either purely synthetic data or a combination of synthetic and genuine data, as privacy regulations permit.

Another crucial advantage is the ability to inject more variety into datasets. Real datasets are often unbalanced, leading to biased outcomes. A frequently cited example is recruitment algorithms that perpetuate gender discrimination due to training on historical data predominantly featuring men in tech roles. Synthetic data can rebalance such datasets, promoting fairness in AI applications.

Moreover, it can address insufficiencies in data diversity caused by natural distributional phenomena. For example, while electronic medical records (EMR) may have enough data for studying common conditions like hypertension or Type II diabetes, they often don’t provide sufficient information for rare diseases like necrotizing enterocolitis or narcolepsy. Synthetic data can help fill these gaps, offering the variety needed for thorough research and analysis.

By offering enhanced flexibility and helping to reduce both bias and vulnerability to overfitting, synthetic data continues to grow in value. It proves invaluable in scenarios where real data is scarce, imbalanced, or subject to privacy constraints, enabling the development of more robust, fair, and effective AI solutions across various domains.

Synthetic data risks

While synthetic data offers numerous benefits, it's important to be aware of several concerns associated with its use. One significant issue is the risk of model collapse, also known as the curse of recursion. This phenomenon occurs when models are trained iteratively or recursively on data they themselves generate, leading to a progressive loss of the tails of the real data distribution and eventual degradation of model quality. Julia Kempe, co-author of "Model Collapse Demystified: The Case of Regression," vividly illustrates this concept with the image of a dragon eating its own tail.

However, it's crucial to note that model collapse is not an inevitability. Researchers argue that a more realistic scenario involves synthetic data accumulating alongside rich real data, rather than entirely replacing it. This approach can mitigate the risks associated with purely synthetic data generation. While synthetic data on its own, without human intervention, may risk model collapse, incorporating human oversight and real-world data can lead to better outcomes.
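The dragon-eating-its-tail effect, and the way accumulating data can counter it, shows up even in a toy one-dimensional setting. In the sketch below, each "model" is just a Gaussian fitted by maximum likelihood; each new generation is trained either only on samples from the previous model (replace) or on all data seen so far, including the original real data (accumulate). This is our own illustration under those assumptions, not a reproduction of any paper’s experiments.

```python
import numpy as np


def recursive_training(generations, n=20, accumulate=False, seed=0):
    """Fit a Gaussian, sample from it, refit, and repeat; return the final fitted std."""
    rng = np.random.default_rng(seed)
    data = rng.normal(0.0, 1.0, size=n)  # the only real data: n draws from N(0, 1)
    for _ in range(generations):
        mu, sigma = data.mean(), data.std()  # maximum-likelihood fit of the "model"
        synthetic = rng.normal(mu, sigma, size=n)
        # Replace: the next model sees only synthetic data.
        # Accumulate: it sees the real data plus all earlier synthetic data.
        data = np.concatenate([data, synthetic]) if accumulate else synthetic
    return data.std()
```

With 300 generations of 20 samples each, the replace strategy’s fitted standard deviation typically shrinks toward zero, meaning the tails (and eventually nearly all variation) are lost, while the accumulate strategy stays close to the spread of the original data.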

Quality assurance presents another challenge in the realm of synthetic data. Ensuring that synthetic data accurately reflects real-world scenarios is a complex task that requires sophisticated algorithms. This concern is closely tied to the need for continuous validation to guarantee that synthetic data remains relevant and effective for training and testing AI models.

Bias is a further consideration when working with synthetic data. If the original data used to generate synthetic datasets contains biases, these can be inadvertently transferred, potentially impacting the fairness of AI models trained on this data. To address this issue, thoughtful techniques are necessary to identify and remove undesirable biases, promoting the development of fair and equitable AI systems.

These concerns underscore the importance of careful implementation and ongoing monitoring when utilizing synthetic data in AI and machine learning applications. While synthetic data offers powerful capabilities, it requires diligent management to ensure its benefits are realized without compromising the integrity and fairness of AI models.

Safety and ethical considerations of synthetic data

As mentioned, synthetic data is designed to replicate the statistical properties of real data without including any actual personal information. This makes synthetic data a safer alternative for training AI models, as it greatly reduces the risk of exposing sensitive or personally identifiable information (PII).

By using synthetic data, organizations can protect sensitive information while still benefiting from the data's utility. This is particularly crucial in industries like healthcare, finance, and human resources, where data breaches can have severe consequences. Synthetic data allows for the development and testing of AI systems in a way that supports compliance with data protection regulations and maintains user privacy.

Another ethical challenge of synthetic data is managing biases—both the perpetuation of biases present in the original data and the introduction of new biases not found in the underlying real data. If not carefully controlled, these biases can lead to unfair or discriminatory outcomes in AI models. To mitigate this, it’s essential to:

- Analyze original data: Thoroughly examine the original data for biases before using it to generate synthetic data.

- Refine algorithms: Develop and refine algorithms to produce synthetic data that is free from these biases.

- Conduct regular audits: Regularly audit synthetic data and the AI models trained on it to ensure fairness and accuracy.

Generating synthetic data should adhere to high ethical standards to ensure its responsible use. This involves:

- Transparency: Clearly documenting how synthetic data is generated and used, allowing stakeholders to understand the process and trust the data’s integrity.

- Accountability: Establishing accountability measures for the creation and application of synthetic data, ensuring that it is used to benefit all users and does not harm specific groups.

- Continual improvement: Committing to the continuous improvement of synthetic data generation techniques to enhance their safety, accuracy, and fairness.

By addressing these safety and ethical considerations, organizations can leverage synthetic data effectively while maintaining trust and upholding high standards of data privacy and integrity.

Synthetic data for privacy protection: How does synthetic data compare to traditional data anonymization techniques?

Synthetic data and traditional anonymization techniques both aim to protect privacy, but they differ significantly in their approaches and outcomes. Synthetic data involves creating entirely new data points aligned with the original dataset, while traditional anonymization methods alter existing data.

In terms of anonymity, synthetic data generally offers stronger protection by generating new data points rather than masking existing ones. This approach can reduce the risk of re-identification that sometimes occurs with traditional methods. However, it's important to note that the level of privacy protection can vary depending on the specific techniques used in both synthetic data generation and traditional anonymization.

Regarding data utility, synthetic data often preserves utility more effectively than traditional anonymization techniques, which may degrade data quality through masking, aggregation, or binning. This can make synthetic data potentially more helpful for AI training and analysis.

Synthetic data also offers opportunities for addressing bias. When properly designed, synthetic data generation can help reduce biases present in original datasets. Traditional anonymization, on the other hand, may inadvertently preserve existing biases.

While synthetic data shows promise in balancing privacy, utility, and fairness, it's important to be aware of the strengths and limitations of both approaches. The effectiveness of either method depends on various factors, including the specific techniques employed, the nature of the data, and the intended use case. Organizations should carefully evaluate their needs and consult with data privacy experts to determine the most appropriate approach for their specific circumstances.

| Aspect | Synthetic data | Traditional anonymization |
|---|---|---|
| Anonymity | Creates entirely new data points, mitigating the risk of re-identification. | Masks or alters existing data, risking re-identification. |
| Data utility | Aims to maintain statistical properties, but for textual data it can be challenging. | Pseudo-anonymization techniques like data masking often maintain statistical properties of the underlying dataset better. |
| Bias reduction | Can be designed to eliminate biases from original data. | May still reflect inherent biases in the original data. |
| Overall advantage | Safer, more effective for privacy, utility, and fairness. | Less effective, with potential privacy and bias issues. |

The future potential of synthetic data

The potential of synthetic data in AI technology has been recognized for years, with industry leaders discussing its promise long before it reached mainstream attention. As AI continues to evolve, synthetic data is poised to play an increasingly crucial role in its development and application across various sectors.

Synthetic data offers a powerful solution to many challenges faced by enterprises in their AI initiatives. It provides a way to overcome data scarcity, enhance privacy protection, and improve the quality and diversity of training data. As organizations continue to digitize and seek competitive advantages through AI, the demand for high-quality, diverse, and ethically sourced data will only grow.

Looking ahead, we can expect to see synthetic data revolutionizing several key areas in enterprise AI:

- Accelerated AI model development: By providing a virtually unlimited supply of diverse, tailored data, synthetic data can significantly speed up the process of training and testing AI models.

- Enhanced privacy compliance: As data privacy regulations become more stringent, synthetic data offers a way for enterprises to innovate without risking customer data exposure.

- Improved fairness and bias reduction: Synthetic data generation techniques can be designed to address and mitigate biases present in real-world data, leading to fairer AI systems.

- Cost-effective scaling: Generating synthetic data can be more cost-effective than collecting and annotating real-world data, especially for large-scale AI projects.

- Simulation of rare scenarios: In industries where certain events are critical but rare (e.g., fraud detection in finance or handling outages in grid modeling), synthetic data can help create robust models without waiting for real-world occurrences.

As techniques continue to refine and new applications emerge, synthetic data is set to play a pivotal role in shaping the future of enterprise AI. It promises to democratize AI development, allowing organizations of all sizes to leverage advanced AI capabilities without the need for massive real-world datasets.

The journey of synthetic data from a background concept to a transformative tool in AI underscores its vast potential. As we move forward, synthetic data will likely become an integral part of the AI strategy for forward-thinking enterprises, driving innovation, efficiency, and competitive advantage across industries.