When it comes to unlocking AI's potential, some might picture GenAI engineers, armed with nothing more than an API key, a database, and a chatbot, each furiously working to master AI's capacities.

But I believe that truly leveraging AI will come not from solitary expertise, but from collaboration and seamless integration into a company's existing applications and workflows. So how is this collaboration possible?

Across the many different capabilities of LLMs, at Moveworks we believe ‘function calling’ (the process of invoking a predefined procedure or routine within software to perform a specific task) to be one of the most important capabilities that GenAI platforms require.

This is the critical capability that engineers and enterprises alike should seek out in order to get the most value from GenAI solutions and to build AI experiences that will capture the attention and ongoing adoption of end users.

So… what is ‘function calling’?

Think of function calling as the process where an AI system autonomously selects and triggers a specific function to execute a task. It enables architects and developers to naturally integrate their own capabilities into GenAI experiences without ever having to manage the complexities of GPUs, LLMs, APIs… and all the other fancy three-letter acronyms associated with this rapidly evolving space.
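To make the idea concrete, here is a minimal sketch of the select-and-trigger loop in Python. Everything in it is an assumption made for illustration: the function names, the `TOOLS` registry, and especially `stub_model`, which stands in for a real LLM by picking a function with a simple keyword match. It is not how any particular platform implements function calling.

```python
import json

# Hypothetical business functions a developer might expose to an AI system.
def reset_password(username: str) -> str:
    return f"Password reset link sent to {username}"

def get_pto_balance(username: str) -> str:
    return f"{username} has 12 days of PTO remaining"  # hard-coded demo value

# Registry mapping a function name to a description and the callable itself.
TOOLS = {
    "reset_password": ("Reset a user's password", reset_password),
    "get_pto_balance": ("Look up remaining paid time off", get_pto_balance),
}

def stub_model(user_message: str, username: str) -> str:
    """Stand-in for an LLM: returns a JSON function call chosen by keyword match."""
    if "password" in user_message.lower():
        name = "reset_password"
    else:
        name = "get_pto_balance"
    return json.dumps({"name": name, "arguments": {"username": username}})

def dispatch(call_json: str) -> str:
    """Parse the model's structured output and execute the named function."""
    call = json.loads(call_json)
    if call["name"] not in TOOLS:
        raise ValueError(f"Unknown function: {call['name']}")
    _description, func = TOOLS[call["name"]]
    return func(**call["arguments"])

def handle(user_message: str, username: str) -> str:
    """End-to-end: the model selects a function, the dispatcher triggers it."""
    return dispatch(stub_model(user_message, username))
```

The point of the split is that the model only ever produces a structured request (a name plus arguments), while the developer's own code decides what actually runs.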

Actual photograph of an AI engineer waiting for answers to be returned from the latest LLM.

Function calling is what we believe sets a successful AI deployment apart from “toy” prototypes. First chatbots, then copilots, and now AI agents: our experience at Moveworks has taught us that end users demand flexible, interactive solutions that can (seemingly) intuitively understand their needs and take action. However, there’s an evolutionary leap required to go from LLMs to an AI agent, because successful enterprise AI requires a sophisticated approach that goes beyond simply implementing language models.

Achieving this transformation demands careful design, management, and refinement to ensure the specific needs and challenges of the enterprise environment are met.

The great divide: consumer vs. enterprise AI differences

Let’s begin with the obvious reason leaders are exploring generative technologies: Generative AI landed on the map in 2023, demonstrating that it had the potential to capture the engagement of millions across many different modalities and channels.

From there, the argument for consumer AI has spread like wildfire: these applications, driven by the direct needs and decisions of the user, leverage AI to streamline personal tasks through a single interface.

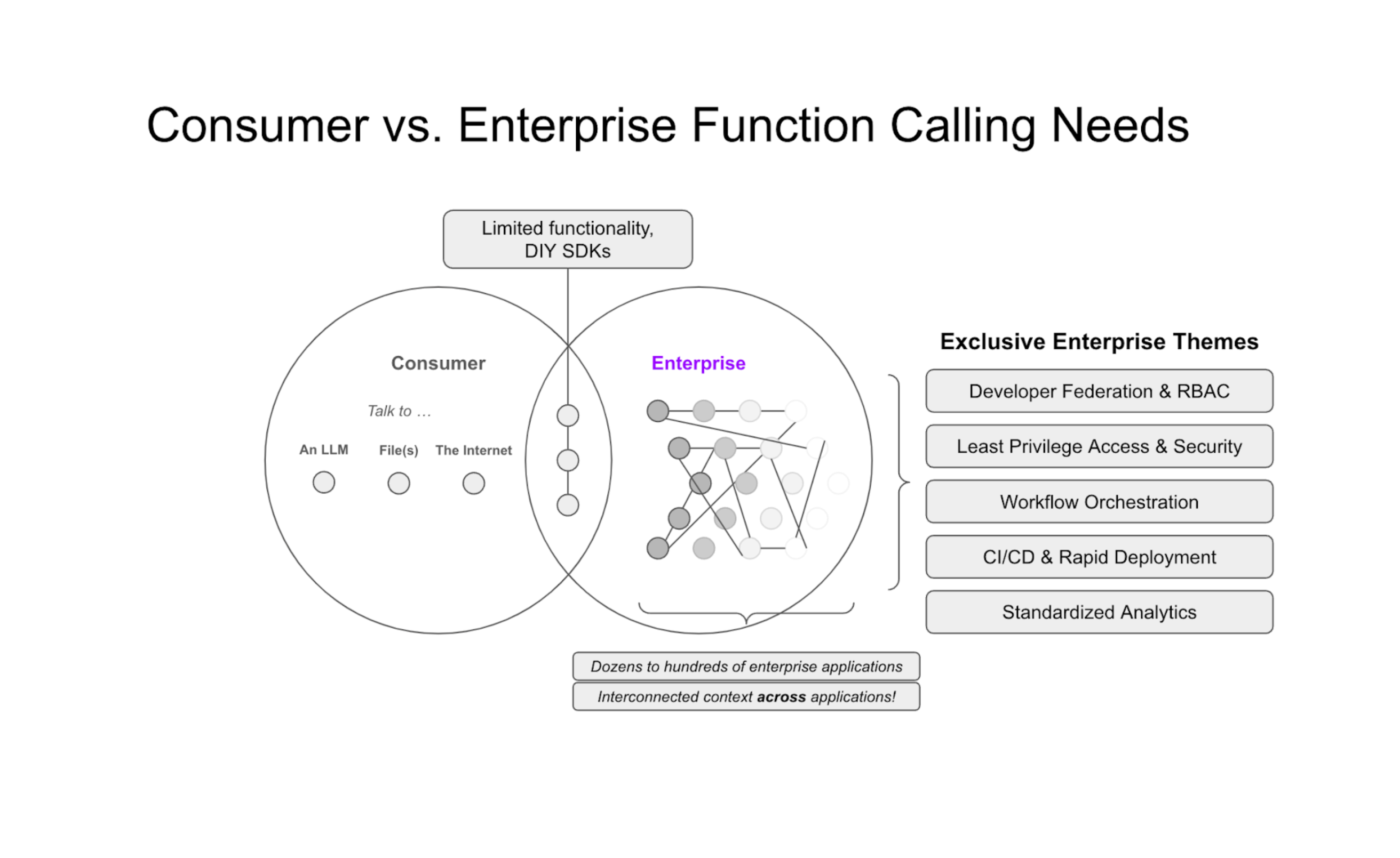

Enterprises, however, navigate a labyrinth of interwoven systems, complex workflows, and multifaceted approval chains. The effectiveness of AI in this realm isn't just about facilitating conversations but about understanding and maneuvering through a dense network of dependencies.

The initiative to adopt AI is in flight: one McKinsey report found that global adoption of AI in at least one business function rose to an astounding 72% in 2024, up from around 50% in recent years. However, this is where we have often found that organizations struggle to achieve scale. Siloed AI agents can result in duplicative work, bespoke implementations, and an inconsistent quality of experience. How organizations scale beyond a single business unit is the essential question.

Align the ease of consumer AI applications with enterprise demands

Enterprise AI must become contextually aware—not just of data but of the nuanced roles, responsibilities, and access levels of individuals within the organization.

But complexity doesn’t always equate to effectiveness. Simply buying the AI software that offers every bell and whistle is no guarantee of success. This misconception overlooks the reality that intricate features—no matter how advanced—do not inherently safeguard against enterprise risks, such as data leaks.

This emphasizes the importance of the architectural imperative: Success in your enterprise AI strategy depends on the underlying architecture. It's not solely about the version of the model offered, but how these solutions are fundamentally designed to operate within and for the enterprise.

The diagram below illustrates the great divide between consumer GenAI solutions and the sprawl of internal systems that employees must navigate in order to get work done.

Why LLM architecture matters for AI agents

Spring forward now to the present: AI agents, with their remarkable reasoning and actioning abilities, are born from the fusion of advanced reasoning, process management, and customization with the innate capabilities of large language models (LLMs).

But AI agents are not simply a byproduct of the inherent abilities of LLMs; they are a deliberate construction, imbued with attributes that ensure consistency, adherence to norms, and explainability.

In a way, LLMs often serve as the "brain" of AI agents, enabling them to understand user instructions, communicate effectively, and gather information. These characteristics do not spontaneously emerge; they require meticulous management and refinement.

While LLMs provide the underlying language understanding, AI agents are distinct entities that take validated plans from the LLM and the human user and act on them in the background. Important characteristics of AI agents include:

- Consistency: Delivering predictable and reliable outputs.

- Normative Adherence: Complying with relevant standards, data types, business policies and APIs.

- (AI) Explainability: Providing clear and justifiable rationale for their actions and decisions.

This agentic engineering process involves embedding reasoning and tools for an LLM to leverage. This transformation empowers the LLM to act autonomously, make informed decisions, and learn from its interactions with the environment and user.

The effectiveness of an AI agent depends heavily on its reasoning tools, which enable interaction with the world. These tools are crucial for tasks like information retrieval or action execution, directly impacting the agent's ability to process and engage with user context.
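Two of the characteristics listed above, normative adherence and explainability, can be illustrated with a small Python sketch. It assumes a hypothetical `create_ticket` tool whose parameters are described by `CREATE_TICKET_SPEC`; the `ToolCall` structure and the `validate` helper are illustrative names, not part of any specific product. The call is checked against the declared data types before anything executes, and it carries a rationale that can be shown to a reviewer.

```python
from dataclasses import dataclass

# Hypothetical tool specification: parameter name -> expected Python type.
CREATE_TICKET_SPEC = {"summary": str, "priority": int}

@dataclass
class ToolCall:
    name: str
    arguments: dict
    rationale: str  # explainability: why the agent chose this call

def validate(call: ToolCall, spec: dict) -> list:
    """Return a list of problems; an empty list means the call conforms to the spec."""
    problems = []
    # Every declared parameter must be present and of the declared type.
    for param, expected in spec.items():
        if param not in call.arguments:
            problems.append(f"missing parameter: {param}")
        elif not isinstance(call.arguments[param], expected):
            problems.append(f"{param} should be {expected.__name__}")
    # Parameters the spec does not know about are rejected as well.
    for param in call.arguments:
        if param not in spec:
            problems.append(f"unexpected parameter: {param}")
    return problems
```

Rejecting a malformed call before execution also supports consistency: the downstream system only ever sees requests in the shape it expects.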

Prioritize deep AI agent development capabilities

The pressing question for organizations looking to venture into the realm of AI agents is not just, "why should I use AI?" but also, "where should I focus?"

The allure of simpler AI implementations might capture the imagination, but for businesses looking to leverage AI for serious, value-driven outcomes, simpler does not mean easier and often fails to meet enterprise needs. The key to unlocking these outcomes often lies in function calling.

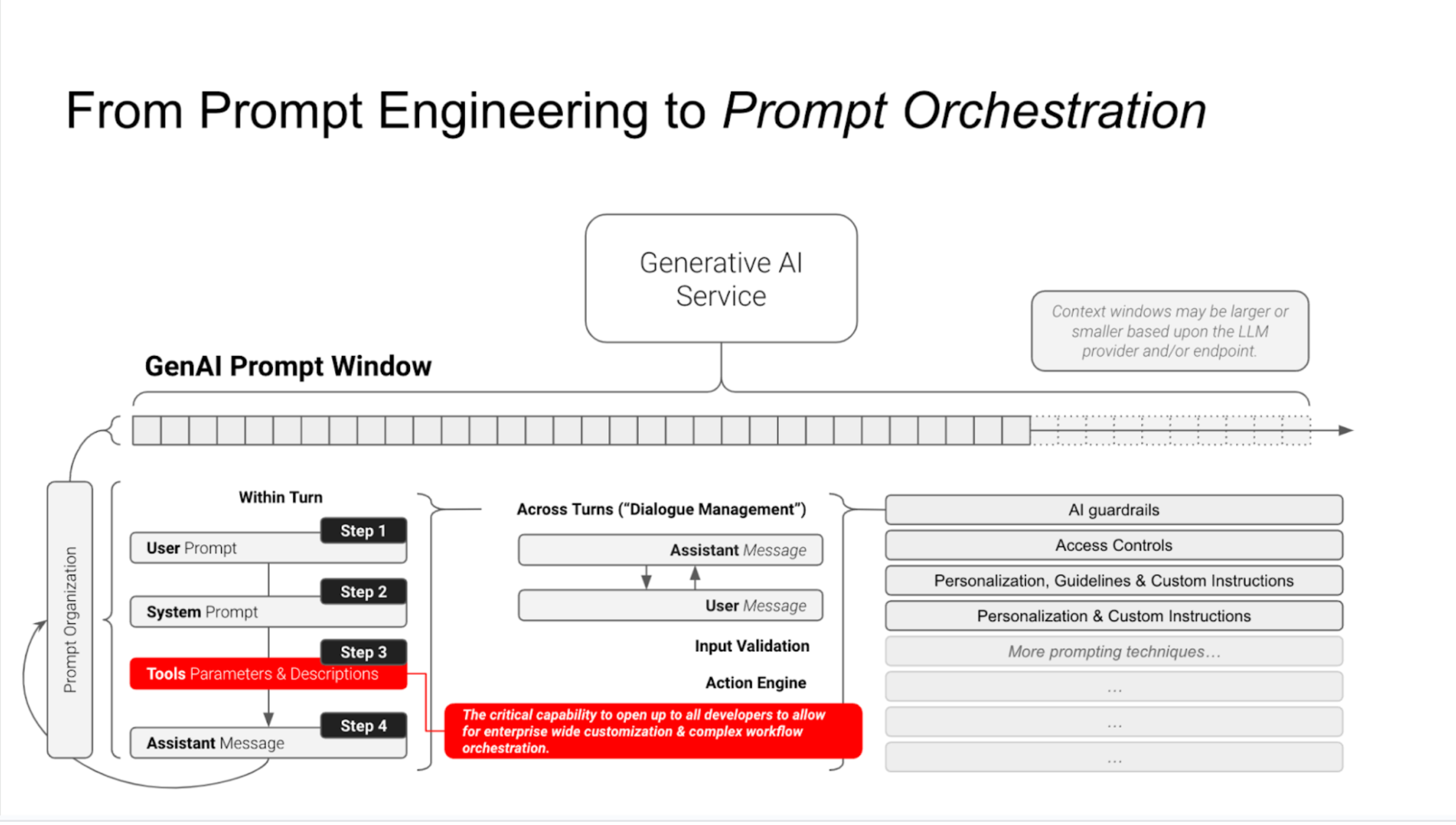

This illustrates the many layers of GenAI services where developers can get trapped trying to debug errors within a conversational AI experience. The red indicates where Moveworks prioritizes engagement for developers.

Diving into function calling

Function calling embodies principles familiar to any seasoned software engineer or system architect, such as rules-based access controls, traceability, analytics, and collaborative development environments.

By adhering to these established standards, GenAI can be delegated to non-centralized teams across different systems and workflows, propelling the customization and integration of AI capabilities into the realm of possibility.
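As a rough illustration of how access controls and traceability might wrap a function call, consider the Python sketch below. The role names, the `ALLOWED_ROLES` policy table, the in-memory `AUDIT_LOG`, and the two HR functions are all hypothetical; a real deployment would rely on the organization's identity provider and a durable audit store.

```python
from datetime import datetime, timezone

# Hypothetical policy: which roles may invoke which functions.
ALLOWED_ROLES = {
    "view_payslip": {"employee", "hr_admin"},
    "change_salary": {"hr_admin"},
}

AUDIT_LOG = []  # in-memory stand-in for a real audit store

def view_payslip(employee: str) -> str:
    return f"Payslip for {employee}"

def change_salary(employee: str, amount: int) -> str:
    return f"Salary for {employee} set to {amount}"

FUNCTIONS = {"view_payslip": view_payslip, "change_salary": change_salary}

def guarded_call(role: str, name: str, **arguments) -> str:
    """Check the caller's role, record the attempt, then run the function."""
    allowed = role in ALLOWED_ROLES.get(name, set())
    # Traceability: every attempt is logged, whether or not it is permitted.
    AUDIT_LOG.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "function": name,
        "arguments": arguments,
        "allowed": allowed,
    })
    if not allowed:
        raise PermissionError(f"role '{role}' may not call '{name}'")
    return FUNCTIONS[name](**arguments)
```

Because the check and the log live in one wrapper rather than in each function, teams outside the central AI group can add functions without re-implementing either concern.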

It's worth noting that developing such integrations from scratch involves substantial investment in terms of time, resources, and expertise, with the cost of building AI ranging from tens of thousands of dollars for specific, narrow AI tools to hundreds of thousands or even over a million dollars for an enterprise-wide solution.

Master Agentic AI deployment through reasoning and function calling with Moveworks

Allowing developers to focus on "function calling" is what I believe differentiates a successful enterprise AI deployment from mere "toy" prototypes: developers can leverage predefined, reusable functions that perform specific tasks.

This is where Moveworks comes into the picture. Our platform offers organizations a way to leapfrog the foundational hurdles associated with implementing function calling across enterprise systems.

AI agents come with lots of potential value, but also with shadow infrastructure whose impact can be difficult for organizations to estimate until it is too late. Moveworks brings capabilities on top of LLMs like automatic document ingestion, AI guardrails and the ReAct generative reasoning framework.
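For readers unfamiliar with ReAct, the pattern interleaves reasoning steps with actions and feeds each action's result back in as an observation. The toy Python loop below sketches that general pattern only; it is not the Moveworks implementation. The `scripted_policy` function is a hypothetical stand-in for an LLM, and `lookup_office` with its demo data is invented for the example.

```python
def lookup_office(city: str) -> str:
    offices = {"austin": "Building 7, 3rd floor"}  # hypothetical demo data
    return offices.get(city.lower(), "no office found")

TOOLS = {"lookup_office": lookup_office}

def scripted_policy(question: str, history: list) -> dict:
    """Stand-in for the LLM: act once, then answer from the observation."""
    if not history:
        return {"thought": "I need the office location.",
                "action": "lookup_office", "input": "Austin"}
    last_observation = history[-1]["observation"]
    return {"thought": "I have what I need.", "answer": last_observation}

def react(question: str, policy, max_steps: int = 3):
    """Alternate thought -> action -> observation until the policy answers."""
    history = []
    for _ in range(max_steps):
        step = policy(question, history)
        if "answer" in step:
            return step["answer"], history
        # Run the chosen tool and record what it returned.
        observation = TOOLS[step["action"]](step["input"])
        history.append({**step, "observation": observation})
    return None, history  # step budget exhausted without an answer
```

The returned `history` doubles as a trace of what the agent thought, did, and saw, which is useful when debugging a conversational experience.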

This enables developers to focus on the specific scope of AI agent capabilities that are most important to organizations: enhancing AI agent performance and range of functionality, rather than the onerous work of building complex AI models from scratch.

At our NYSE hackathon in 2024, one of our developer participants shared that “In just a day, I ended up building multiple agents that seemed impossible.” If you’re interested in learning more about relevant enterprise AI Agent plugins that Moveworks can deploy, check out our plugin library to see how organizations get started!

Check out our whitepaper on how our Agentic Automation Engine bridges the gap between AI agents and business systems, enabling faster development, simplified processes, and a future where AI seamlessly powers the enterprise.

Academic sources

1. ReAct: Synergizing Reasoning and Acting in Language Models [arXiv:2210.03629]

2. Language Models as Zero-Shot Planners: Extracting Actionable Knowledge for Embodied Agents [arXiv:2201.07207]

3. Demonstrate-Search-Predict: Composing retrieval and language models for knowledge-intensive NLP [arXiv:2212.14024]

4. In-Context Retrieval-Augmented Language Models [arXiv:2302.00083]

5. Improving alignment of dialogue agents via targeted human judgements [arXiv:2209.14375]

6. A Generalist Agent [OpenReview]

Blog posts and news articles

7. How GPT-3 Works - Visualizations and Animations [Jay Alammar's Blog]

8. The No. 1 Risk Companies See in Gen AI Usage Isn't Hallucinations [CNBC]

9. The State of AI Agents [LangChain]