The Moveworks Copilot’s reasoning engine is an agent-based AI system that showcases human-like problem-solving capabilities. It uses the reasoning, decision-making, and plugin-calling capabilities of multiple LLMs to accomplish specific tasks. This enables it to meaningfully achieve an employee’s goals by combining the abilities to:

- Understand a user's objective

- Develop a plan to achieve that objective

- Execute function calls according to its plan

- Evaluate the success of this execution

- Iterate on the plan until it successfully achieves its original objective

Importantly, a reasoning engine is able to check back with the user wherever necessary to seek additional context about the goal, confirm its direction, and take user input for adapting the plan on the fly.
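The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Moveworks implementation: `run_reasoning_loop`, `StepResult`, `LoopOutcome`, and the toy planner, executor, and user prompt are all hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class StepResult:
    ok: bool
    output: str
    needs_user_input: bool = False  # True when the step is blocked on the user


@dataclass
class LoopOutcome:
    succeeded: bool
    attempts: int
    history: list = field(default_factory=list)


def run_reasoning_loop(objective, plan_fn, execute_fn, ask_user_fn, max_attempts=3):
    """Plan, execute, evaluate, and re-plan until the objective is met."""
    context = {"objective": objective, "user_answers": {}}
    history = []
    for attempt in range(1, max_attempts + 1):
        plan = plan_fn(context)  # develop (or revise) the plan
        completed = True
        for step in plan:
            result = execute_fn(step, context)  # execute one function call
            history.append(f"{step}: {result.output}")
            if result.needs_user_input:
                # Check back with the user, then re-plan with the new context.
                context["user_answers"][step] = ask_user_fn(result.output)
                completed = False
                break
            if not result.ok:
                context["last_error"] = result.output  # feed the failure into re-planning
                completed = False
                break
        if completed:  # evaluate: every step in the plan succeeded
            return LoopOutcome(True, attempt, history)
    return LoopOutcome(False, max_attempts, history)


# Toy stand-ins for the planner, executor, and user prompt (all hypothetical).
def toy_plan(context):
    return ["request_access"]


def toy_execute(step, context):
    if step not in context["user_answers"]:
        return StepResult(False, "business justification required", needs_user_input=True)
    return StepResult(True, "access request submitted")


outcome = run_reasoning_loop(
    "get access to the analytics tool",
    toy_plan,
    toy_execute,
    ask_user_fn=lambda prompt: "needed for quarterly reporting",
)
print(outcome.succeeded, outcome.attempts)
```

In this toy run the first attempt stops to ask the user for a justification, and the second attempt completes with that answer in context. The `max_attempts` cap is an assumption added so the sketch always terminates.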

In some ways, it attempts to mimic how human beings solve problems, which is why having a reasoning Copilot is so powerful. And from a universal support perspective, our reasoning engine scales to operate reliably within the specifics of each customer’s unique environment (e.g. system integrations, available plugins, business logic, and processes).

Moveworks Copilot is built to be enterprise-ready

Over the last 18 months, we have all seen a wide range of demos that shine a light on the potential of generative AI to do amazing things. However, the journey from demo to enterprise-ready remains challenging: it is difficult to harness the dynamism of LLMs to delight users while still providing enterprise-grade assurances.

At Moveworks, we know what it takes to support the needs of millions of users and hundreds of customers every day, and we created the Reasoning Engine so that the power of generative AI can deliver impact in every organization.

In this post, we’ll dive into four important ways in which the Reasoning Engine makes the Moveworks Copilot enterprise-ready, and why you should care:

1. Adherence to business rules and policies

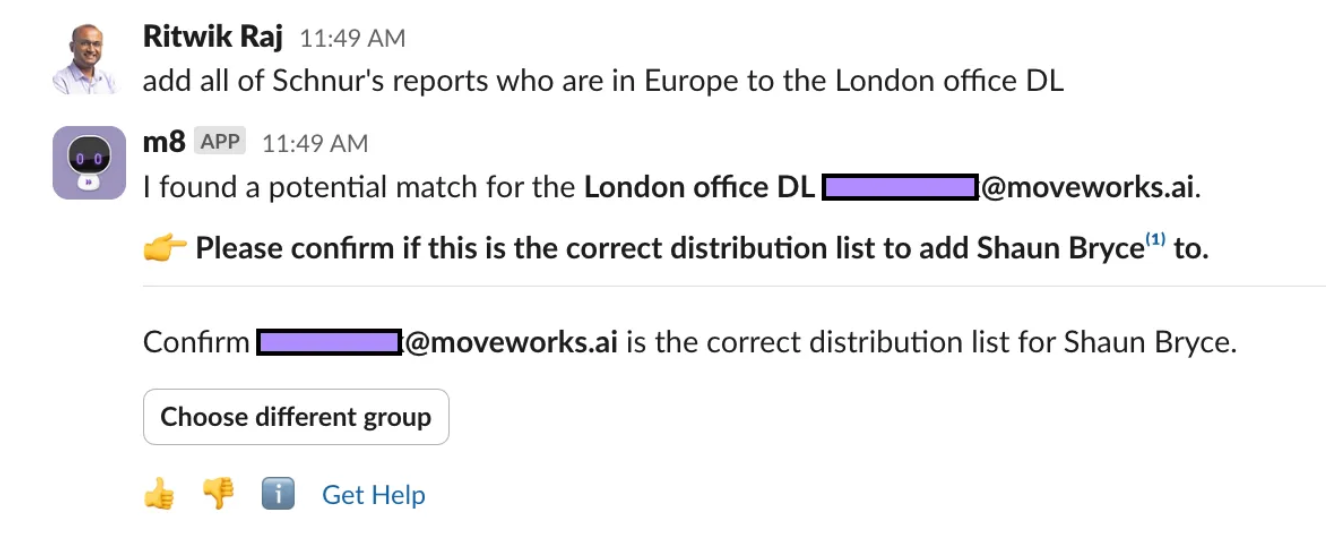

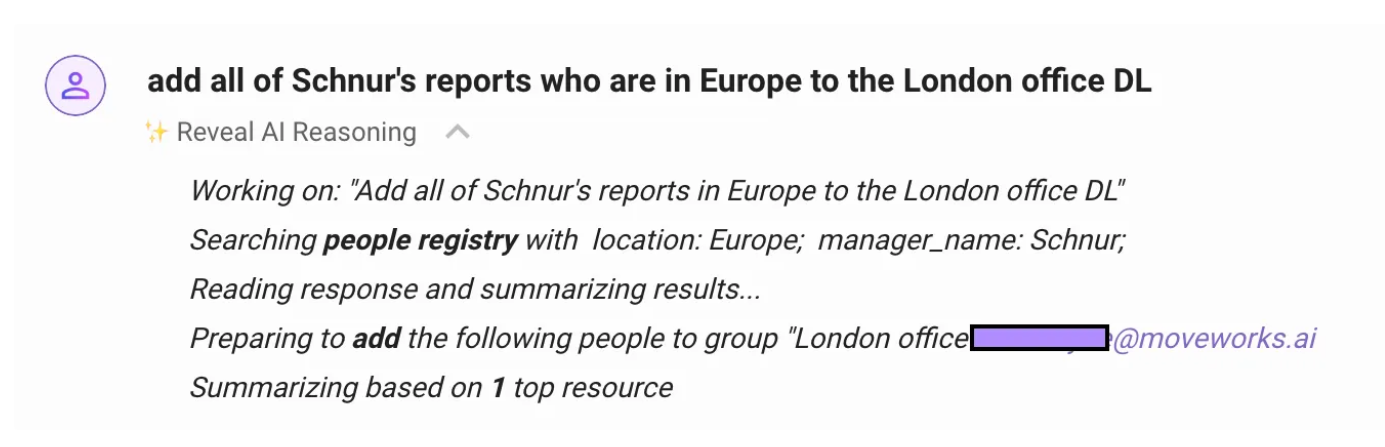

The Reasoning Engine breaks down complex requests into smaller tasks and devises plans to execute these tasks in sequence. However, several of these tasks require actions that modify systems of record such as an ITSM, HRIS, or CRM system.

These systems are more than just authoritative information sources; they are also operated with business rules and processes that determine how changes are made, and who can make or approve such changes. These business processes are unique to each organization, and it’s essential for any AI system to follow these rules when updating information.

Breaking down a complex and multi-step request with plugin selection and reasoning

Reasoning steps showing multiple plugins called autonomously

From the outset, we aligned the Moveworks Copilot’s reasoning with these configured business rules. In the Copilot’s architecture, these policies are encoded into the reasoning and action execution through a combination of instructions and rules. Over the last six months, we have added enhanced reliability and checks for compliance with policies and instructions, enabling customers to build their own use cases and handle situations that require stronger guarantees on processes such as approvals, confirmations, and conditions to offer certain resolutions.

A key example is helping to ensure that the Copilot only offers and takes actions that are allowed based on the use cases (or plugins) that are enabled in the customer’s environment. Also, these plugins can be configured to offer specific types of resolutions to employees (e.g. a customer may elect to allow employees to leverage Moveworks’ native automation for resetting passwords, or they may choose to direct employees to a self-service portal). The Reasoning Engine is aware of these business rules and offers options that are compliant with the rules.
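As a rough sketch of this kind of rule, the snippet below filters candidate plugins down to the ones a customer has enabled and attaches the configured resolution type. The `PluginPolicy` class, the plugin names, and the resolution labels are hypothetical; the post does not describe how Moveworks actually stores this configuration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PluginPolicy:
    enabled: bool
    resolution: str  # hypothetical values: "native_automation", "self_service_portal"


def compliant_options(candidate_plugins, policies):
    """Keep only plugins that are enabled, paired with their configured resolution."""
    options = []
    for name in candidate_plugins:
        policy = policies.get(name)
        if policy is None or not policy.enabled:
            continue  # never offer an action the customer has not enabled
        options.append((name, policy.resolution))
    return options


# Hypothetical customer configuration.
policies = {
    "reset_password": PluginPolicy(enabled=True, resolution="self_service_portal"),
    "provision_software": PluginPolicy(enabled=False, resolution="native_automation"),
}
options = compliant_options(
    ["reset_password", "provision_software", "book_room"], policies
)
print(options)
```

Here only `reset_password` survives, and it is offered as a pointer to the self-service portal rather than as native automation, mirroring the password reset example above.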

These policies also enable the Reasoning Engine to collect required information as part of processing requests and surface any exceptions to the employee by interpreting any errors encountered. This ensures that the employee gets a specific call to action so that they can take the next step to resolve the error and complete the task. An example is when an employee requests access to a software application that requires a business justification and/or approvals to process.

In this way, the plugin architecture and reasoning combine powerfully to enable the Copilot to be configurable and compliant, while still offering employees a delightful conversational experience.

2. Selection of the best plugins or use cases

As we discussed in the previous post, the architecture of the Reasoning Engine is designed to work with specialized plugins, which are the set of tools or use cases deployed in customer environments. The Reasoning Engine is capable of independently selecting one or more plugins to serve the employee’s request.

Plugin selection is a critical part of the plan-execute-adapt reasoning framework because high plugin selection accuracy is required to converge on the solution to the employee’s problem. In addition, it needs to be flexible enough to use contextual information from previous requests and conversation context, as well as from previous steps taken by the Reasoning Engine in the autonomous plugin execution.

A key aspect of plugin selection is the autonomous plan-evaluate loop, which we touched upon in the last post as well. This consists of a feedback loop between the planning module and evaluator module, designed to enable the Reasoning Engine to quickly explore multiple solution approaches or paths and select the one with the highest likelihood of success.
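One way to picture this feedback loop is a planner that proposes candidate plugin sequences, an evaluator that scores them, and a stopping rule once a candidate looks good enough. The sketch below is illustrative only: `plan_evaluate`, the score threshold, and the toy planner and evaluator are assumptions made for the example, not details from the Reasoning Engine.

```python
def plan_evaluate(request, propose, evaluate, max_rounds=3, threshold=0.8):
    """Alternate between proposing plans and scoring them; return the best one found."""
    best_plan, best_score = None, float("-inf")
    feedback = None
    for _ in range(max_rounds):
        for plan in propose(request, feedback):
            score = evaluate(request, plan)
            if score > best_score:
                best_plan, best_score = plan, score
        if best_score >= threshold:
            break  # good enough: stop exploring
        # Pass the evaluator's verdict back to the planner for the next round.
        feedback = {"best_plan": best_plan, "best_score": best_score}
    return best_plan, best_score


# Toy planner and evaluator (hypothetical). The evaluator rewards plans that
# cover every plugin this particular request needs.
REQUIRED = {"knowledge_search", "ticket_lookup"}


def toy_propose(request, feedback):
    if feedback is None:
        return [("knowledge_search",), ("ticket_lookup",)]
    return [feedback["best_plan"] + ("ticket_lookup",)]


def toy_evaluate(request, plan):
    return len(REQUIRED & set(plan)) / len(REQUIRED)


plan, score = plan_evaluate("Is my VPN ticket resolved?", toy_propose, toy_evaluate)
print(plan, score)
```

In the toy run, the first round only finds single-plugin plans that cover half of what is needed, so the planner extends the best one in the second round and the loop stops.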

Through 2024, we have solved several challenges with plugin selection and execution, including the ability to invoke multiple plugins simultaneously and combine their responses into one user-facing response with low latency. We have also reinforced our plugin selection framework to better handle follow-on queries and use customer-provided descriptions and metadata for custom agentic use cases to surface these unique use cases more effectively. As our customers build an ever-increasing number of agentic automations, we are well positioned to handle the increased complexity.
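Invoking several plugins at once and merging their output can be sketched with Python's `asyncio.gather`. The plugin names, simulated latencies, and the simple newline join are hypothetical stand-ins; a real system would presumably summarize the results with an LLM rather than concatenate them.

```python
import asyncio


async def call_plugin(name, delay_seconds, payload):
    """Stand-in for a real plugin call; sleeps to simulate network latency."""
    await asyncio.sleep(delay_seconds)
    if payload is None:
        raise RuntimeError(f"{name} returned no data")
    return f"{name}: {payload}"


async def invoke_and_combine(calls):
    """Run all plugin calls concurrently and merge the successful results."""
    # gather() returns results in the order the calls were passed in,
    # regardless of which plugin finishes first.
    results = await asyncio.gather(
        *(call_plugin(*call) for call in calls), return_exceptions=True
    )
    # A failing plugin should not sink the whole response, so drop its exception.
    successes = [r for r in results if not isinstance(r, Exception)]
    return "\n".join(successes)


combined = asyncio.run(invoke_and_combine([
    ("knowledge_search", 0.02, "VPN setup guide"),
    ("ticket_lookup", 0.01, "one open VPN ticket"),
    ("room_finder", 0.01, None),  # simulated failure
]))
print(combined)
```

Because the calls overlap, the total wait is roughly that of the slowest plugin instead of the sum of all of them, which is where the latency benefit comes from.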

3. Earning the trust of the employee with clear references

The Copilot is designed to help make sure that every employee can trust the results they get, and the most important component of creating that trust is to ensure that responses are clearly referenced and that employees can easily check the sources.

This process is called grounding, and to achieve the goal of providing trustworthy reference information, we have addressed several challenges over the last few months:

- There is a diversity of content that is referenceable: ticket and approval IDs, knowledge articles, PDFs, PowerPoint files, people records, room information, applications, and other kinds of organization-specific entities. All of these are relevant and important to reference, sometimes multiple types in the same response.

- The “span” in the summary that must be linked to the reference can be anything from a single word to a whole paragraph. To provide a useful reference, the link must be inserted at the right place.

- Relevant resources and references can be present in the conversation context from previous requests or interactions, and the Reasoning Engine needs to be able to reference those in addition to the new references that may be identified in the current or latest interaction.

- Given that the Copilot is multilingual, translation should also incorporate and adjust the linking as necessary when the content is being transformed.

To achieve this, we enforce several guardrails and principles on the reasoning steps. Just to share a few:

- The Reasoning Engine must invoke available plugins before generating a response.

- It provides visibility into its thinking and the steps it is taking, along with the results of those steps, so that the employee is fully informed about which inputs are being considered.

- References found by the plugins are linked inline within the response adjacent to where the content is used in the summary. These references are then compiled in a single pane to give the employee easy access to inspect them.

- References are deduplicated to make sure that the employee is not bombarded with repetitive information.

- The Reasoning Engine assesses if the referenced sources contain the answer to the employee’s question, and if they do not, it informs the employee that it does not have an answer to the question. It can, however, provide relevant sources for them to explore what is available.
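To make the inline linking and deduplication guardrails concrete, here is a small sketch that places a numbered marker right after the span each source supports and builds a deduplicated reference pane. The `Reference` class, the `[n]` marker format, and the exact-match span lookup are simplifying assumptions for illustration, not a description of how the Copilot does it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Reference:
    source_id: str
    title: str
    span: str  # the text in the summary that this source supports


def link_references(summary, refs):
    """Insert inline markers after supported spans and build a deduplicated pane."""
    numbers = {}  # source_id -> citation number, so each source is listed once
    pane = []
    for ref in refs:
        if ref.source_id not in numbers:
            numbers[ref.source_id] = len(numbers) + 1
            pane.append(f"[{numbers[ref.source_id]}] {ref.title}")
        marker = f"[{numbers[ref.source_id]}]"
        start = summary.find(ref.span)
        if start == -1:
            continue  # span not present in the summary: nothing to link
        end = start + len(ref.span)
        if not summary.startswith(marker, end):  # avoid repeating the same marker
            summary = summary[:end] + marker + summary[end:]
    return summary, pane


summary = "Reset your password in the portal. VPN access needs manager approval."
refs = [
    Reference("kb-1", "Password reset guide", "Reset your password in the portal"),
    Reference("kb-2", "VPN access policy", "VPN access needs manager approval"),
    Reference("kb-1", "Password reset guide", "Reset your password in the portal"),
]
linked, pane = link_references(summary, refs)
print(linked)
print(pane)
```

The duplicate `kb-1` entry produces neither a second marker nor a second line in the pane. Spans can be a single word or a whole paragraph here, since the marker is always placed at the end of whatever text matched.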

Moveworks has a strong commitment to the accuracy and reliability of results, and we continue to work toward our north star of citation completeness to enable employees to have full visibility into how their results are created.

4. Provide controllability over reasoning when necessary

We know that machine learning is extremely powerful and gets many predictions right. However, there are always scenarios where some component of the system is not behaving as expected or where the default behavior needs to be adjusted to meet the needs of a customer.

For these scenarios, it is imperative to have structured mechanisms to modify the behavior of the system. This problem is even more challenging for large language models like GPT-4 because the delightful experience they offer is based in large part on their ability to generate responses with a great deal of autonomy. They are flexible in responding to prompts and adapting their responses, but prompting is not always a scalable way to make changes that should apply without each user having to express a preference.

We are investing in many types of override and control mechanisms to allow the behavior of the Reasoning Engine to be influenced and adapted:

We have tools to demonstrate desired behaviors to the Reasoning Engine and guide it to follow them: which plugins to select, how to process the data received from plugins, and how to present the response back to employees.

More specifically, there are different requirements for a variety of scenarios – is the behavior change to be applied globally for all customers, or for specific customers? Is it broad enough so that it should apply to a wide swath of requests, such as general response or tone formatting, or should it be applied only in specific requests or if a certain plugin is invoked? We are building tools that allow the Reasoning Engine to operate autonomously as far as possible, but also enable interventions in rare cases to serve the needs of our users.

We have strong guardrails on preventing toxic and inappropriate content from entering the systems, but in some cases, customers need to be able to add new guardrails or relax some constraints to enable available resources to be served. We are currently testing these toxicity filter customizations.
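The scoping questions above (global versus customer-specific, broad versus tied to a particular plugin) can be modeled as overrides with optional scopes, where the most specific matching override wins. This is a sketch under assumptions: the `Override` class, the setting names, and the precedence order are hypothetical and not taken from the Moveworks tooling.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Override:
    setting: str
    value: str
    customer: Optional[str] = None  # None means "applies to all customers"
    plugin: Optional[str] = None    # None means "applies to all requests"


def resolve(setting, overrides, customer, plugin, default):
    """Return the value of the most specific override matching this request."""
    best_value, best_rank = default, -1
    for o in overrides:
        if o.setting != setting:
            continue
        if o.customer not in (None, customer) or o.plugin not in (None, plugin):
            continue  # scoped to a different customer or plugin
        # Assumed precedence: customer scope outranks plugin scope, both outrank global.
        rank = (2 if o.customer is not None else 0) + (1 if o.plugin is not None else 0)
        if rank > best_rank:
            best_value, best_rank = o.value, rank
    return best_value


# Hypothetical overrides for a response-tone setting.
overrides = [
    Override("tone", "neutral"),
    Override("tone", "formal", customer="acme"),
    Override("tone", "concise", customer="acme", plugin="ticket_lookup"),
]
print(resolve("tone", overrides, "acme", "ticket_lookup", "default"))
print(resolve("tone", overrides, "acme", "knowledge_search", "default"))
print(resolve("tone", overrides, "globex", "ticket_lookup", "default"))
```

With no matching override the function falls back to `default`, which corresponds to leaving the Reasoning Engine's out-of-the-box behavior untouched.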

Our goal is to make many of these tools self-serviceable by customer admins, and we will strive to provide a flexible yet safe way to make these changes while providing a high degree of performance out of the box.

Ready to see how reasoning can reshape how your employees work? Get a demo today and watch AI problem-solve employee support challenges live.
